In the high-stakes world of AI infrastructure, we are currently witnessing a silent war. On one side, we have multimillion-dollar GPU clusters capable of training models with trillions of parameters. On the other side, we have an aging network architecture that was never designed to handle them.

The problem isn't just about "speed"; it's about intelligence. As we scale our AI fabrics to tens of thousands of nodes, we’ve discovered that traditional load balancing is failing us. The solution? A breakthrough known as BGP Next-Next Hop Node (NNHN).

If you want your network to stop acting like a blind traffic cop and start acting like a high-speed air traffic controller, read on.

The Blindness of the Old Guard: Static Hashing

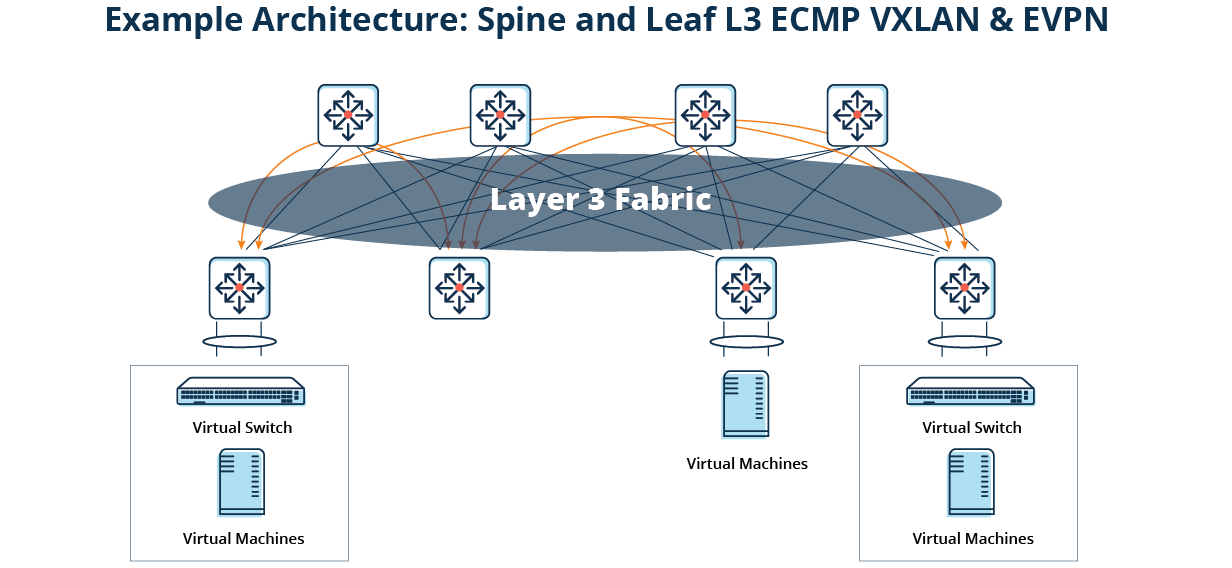

For decades, we relied on Static Load Balancing, better known as Equal-Cost Multi-Path (ECMP). It was simple and elegant: every time a packet hit a switch, the hardware ran a quick math formula (a hash) over the packet's header fields to decide which path to take, so every packet of a given flow followed the same link. In a world of millions of tiny "mouse flows," like web browsing or email, this worked perfectly. The law of large numbers ensured traffic was spread evenly.
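To make the hashing step concrete, here is a minimal Python sketch of static ECMP path selection. The `FiveTuple` type, the `ecmp_pick` helper, and the uplink names are all hypothetical, and SHA-256 is only a stand-in: real switch ASICs use their own hardware hash functions. The point is simply that the choice depends on header fields alone, never on how busy a link is.

```python
import hashlib
from typing import NamedTuple


class FiveTuple(NamedTuple):
    """Header fields that identify a flow (illustrative model)."""
    src_ip: str
    dst_ip: str
    protocol: int
    src_port: int
    dst_port: int


def ecmp_pick(flow: FiveTuple, uplinks: list[str]) -> str:
    """Pick an uplink from a hash of the flow's header fields only."""
    key = "|".join(map(str, flow)).encode()
    # SHA-256 is a stand-in for whatever hash the hardware implements.
    digest = hashlib.sha256(key).digest()
    index = int.from_bytes(digest[:4], "big") % len(uplinks)
    return uplinks[index]


uplinks = ["spine1", "spine2", "spine3", "spine4"]
flow = FiveTuple("10.0.0.1", "10.0.1.1", 17, 49152, 4791)

# The same flow always maps to the same uplink, regardless of link load.
first = ecmp_pick(flow, uplinks)
second = ecmp_pick(flow, uplinks)
print(first, first == second)
```

Because load never enters the calculation, a long-lived flow stays pinned to whichever link its hash selects.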

But AI isn't made of mice; it’s made of Elephants.

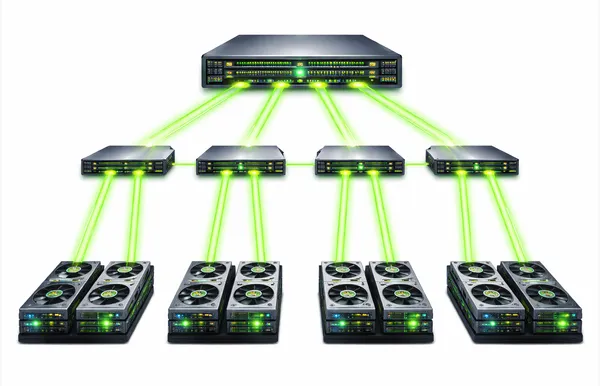

When a GPU cluster starts an All-Reduce collective, it sends massive, sustained bursts of data known as "Elephant Flows." When two of these massive flows get hashed to the same link by a "blind" ECMP switch, that link hits 100% utilization while the link right next to it sits empty. We call this a hash collision, and in an AI fabric, it’s a death sentence for performance.
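How likely is such a collision? A rough back-of-the-envelope estimate, assuming each flow is hashed independently and uniformly across equal-cost links (an idealization, not a model of any particular switch), is the classic birthday-problem calculation. The `collision_probability` helper below is hypothetical and only illustrates the arithmetic.

```python
from math import perm


def collision_probability(flows: int, links: int) -> float:
    """Chance that at least two flows share a link under uniform hashing."""
    if flows > links:
        return 1.0  # pigeonhole: more flows than links guarantees a collision
    # P(no collision) = links * (links - 1) * ... / links ** flows
    return 1.0 - perm(links, flows) / links**flows


print(collision_probability(2, 4))  # 0.25
print(round(collision_probability(4, 8), 2))  # 0.59
```

Even with twice as many links as elephant flows, a collision is more likely than not under this assumption, and with only a handful of huge flows there is no law of large numbers to smooth things out.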

The Evolution: Why "Local" Isn't Enough

Engineers tried to fix this with Dynamic Load Balancing (DLB). This was a step up—it made the switch "reactive." The switch would look at its own outgoing ports and say, "Wait, Port 1 is getting slammed. Let me move this next flow to Port 2." It sounds smart, but there’s a fatal flaw: Local Visibility.

Imagine you’re driving. You see the road directly in front of you is clear, so you accelerate. What you don't see is the ten-car pileup just around the next corner. This is exactly how DLB fails. A Leaf switch might see its local connection to a Spine is clear, but it has no idea that the path beyond that Spine, the link to the Super-Spine, is a total bottleneck. It keeps sending traffic into a "black hole" of congestion because it can't see over the horizon.
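The blind spot is easy to reproduce in a toy model. In the sketch below, `dlb_pick` is a hypothetical helper that chooses the least-utilized local uplink, and the utilization figures are invented for illustration. The `downstream_util` table represents state the Leaf cannot see.

```python
def dlb_pick(local_util: dict[str, float]) -> str:
    """Pick the uplink whose local egress port is least utilized."""
    return min(local_util, key=lambda uplink: local_util[uplink])


# What the Leaf can measure: its own ports toward each Spine.
local_util = {"spine1": 0.10, "spine2": 0.40}

# What the Leaf cannot measure: each Spine's link to the Super-Spine.
downstream_util = {"spine1": 0.98, "spine2": 0.20}

choice = dlb_pick(local_util)
print(choice, downstream_util[choice])  # spine1 0.98
```

The locally "best" port leads straight into the most congested downstream link, which is the failure mode described above.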

Enter the X-Ray Vision: Global Load Balancing via BGP NNHN

To truly feed a GPU cluster, we need Global Load Balancing (GLB). This is the "Gold Standard" for 2026 AI fabrics, and it is powered by the magic of BGP Next-Next Hop Node (NNHN).

NNHN is an extension to BGP that finally gives our switches "X-ray vision." In a standard setup, a switch only knows its immediate neighbor (the Next Hop). With NNHN, the network fabric begins to talk. A Spine switch doesn't just tell the Leaf "I'm here"; it also says, "And here are the Super-Spines I am connected to."

By sharing the identity of the Next-Next Hop, the Leaf switch suddenly has a map of the entire end-to-end journey.
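As a rough mental model, the Leaf can turn these advertisements into a table of two-hop paths per destination. The Python sketch below is a simplification under stated assumptions: the `Advertisement` class, its field names, and the node names are hypothetical and do not reflect the actual BGP wire encoding.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Advertisement:
    """Simplified view of a route carrying next-next-hop info (assumed shape)."""
    prefix: str
    next_hop: str
    next_next_hops: tuple[str, ...]


def build_path_map(ads: list[Advertisement]) -> dict[str, list[tuple[str, str]]]:
    """Map each prefix to the (next hop, next-next hop) pairs that reach it."""
    paths: dict[str, list[tuple[str, str]]] = {}
    for ad in ads:
        for nnh in ad.next_next_hops:
            paths.setdefault(ad.prefix, []).append((ad.next_hop, nnh))
    return paths


ads = [
    Advertisement("10.1.0.0/16", "spine1", ("superspine-a", "superspine-b")),
    Advertisement("10.1.0.0/16", "spine2", ("superspine-a",)),
]
path_map = build_path_map(ads)
for path in path_map["10.1.0.0/16"]:
    print(path)
```

With this table in hand, the Leaf knows not just which Spines it can use, but which Super-Spines sit behind each one.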

The Synchronized Dance of Data

When you combine NNHN with real-time telemetry, the network becomes a living, breathing organism. When a link deep in the core of the fabric starts to sweat, that Spine switch immediately signals back to the Leaf: "Path to Super-Spine A is congested!" The Leaf switch, now armed with "global" knowledge, makes a split-second decision. It knows that while its own connection to "Spine 1" is fine, the path behind "Spine 1" is blocked. It proactively steers the incoming Elephant flow to "Spine 2," which has a verified clear path to the destination.
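One simple way to express that decision is to score each two-hop path by its worst link and pick the path with the lowest score. The sketch below assumes this "minimize the bottleneck" policy purely for illustration; real implementations may weigh link quality differently. The `glb_pick` helper, the node names, and the utilization numbers are all hypothetical.

```python
Path = tuple[str, str]  # (spine, super-spine)


def glb_pick(local_util: dict[str, float], remote_util: dict[Path, float]) -> Path:
    """Pick the path whose most congested hop is the least congested overall."""

    def bottleneck(path: Path) -> float:
        spine, _ = path
        # A path is only as good as its worst link.
        return max(local_util[spine], remote_util[path])

    return min(remote_util, key=bottleneck)


# Leaf-to-Spine utilization, measured locally.
local_util = {"spine1": 0.10, "spine2": 0.40}

# Spine-to-Super-Spine utilization, learned from remote telemetry.
remote_util = {
    ("spine1", "superspine-a"): 0.98,
    ("spine2", "superspine-a"): 0.20,
}

best = glb_pick(local_util, remote_util)
print(best)  # ('spine2', 'superspine-a')
```

A purely local view would have preferred "Spine 1" at 10% utilization. Once the remote hop is taken into account, its bottleneck is 98%, so the flow goes to "Spine 2" instead.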

This isn't just balancing; it's Proactive Traffic Engineering.

The LevelUpIT Bottom Line

We are moving away from the era of "dumb pipes." In an AI-driven world, the network must be as intelligent as the models it carries. By implementing BGP NNHN and Global Load Balancing, we cut "tail latency," the annoying delay that keeps GPUs idling while they wait for data.

When your GPU ROI is measured in thousands of dollars per hour, NNHN isn't just an "advanced feature." It’s the difference between a cluster that thrives and a cluster that crawls.