
In environments where every microsecond and every bit of bandwidth counts, traditional unicast and broadcast models fail spectacularly. IP multicast is a bandwidth-conserving technology that reduces traffic by delivering a single stream of information to thousands of recipients simultaneously.

| Requirement | Traditional Unicast/Broadcast Drawback | Multicast Advantage |
|---|---|---|
| Bandwidth Efficiency | Unicast requires the source to send a separate copy of the data for each receiver, duplicating traffic on shared links and burdening the source server. | The source sends a single stream. Routers replicate packets only where paths diverge, minimizing bandwidth consumption across the backbone. |
| Low, Deterministic Latency | Unicast introduces queuing and serialization delays that grow with the number of flows, because the source must transmit one copy per receiver. Broadcast forces every device on a subnet to process the traffic, increasing CPU load and potential jitter. | Each packet follows one distribution tree with a single copy per link, giving a predictable queuing and forwarding path. This is critical for maintaining ultra-low latency and minimal jitter. |
| Scalability | Unicast scales poorly for one-to-many applications. Sending a high-bandwidth video stream to thousands of users would overwhelm most network infrastructures. | Multicast scales well. Adding receivers does not increase the load on the source, and links already on the distribution tree carry no extra copies; new branches are simply "grafted" onto the existing tree. |
| Data Integrity & Compliance | Ensuring that thousands of unicast receivers get the exact same data packet at the same time is nearly impossible, making it difficult to correlate timestamps for financial audits. | Because every subscribed receiver gets the same packet from the same distribution tree, timestamp correlation and data consistency for regulatory compliance are much simpler. |
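To make the bandwidth row concrete, here is a small Python sketch that counts packet copies per link for unicast versus multicast over the same distribution tree. The topology (`PARENT`), the receiver counts, and the 8 Mb/s stream rate are hypothetical values chosen for illustration, and the model stops at the edge routers (it ignores the final LAN hop, where unicast would still carry one copy per receiver).

```python
# Hypothetical topology: each router maps to its upstream neighbor,
# ending at the source. Links are (upstream, downstream) pairs.
PARENT = {
    "core": "source",
    "agg1": "core",
    "agg2": "core",
    "edge1": "agg1",
    "edge2": "agg1",
    "edge3": "agg2",
    "edge4": "agg2",
}


def path_from_source(router):
    """Return the set of (upstream, downstream) links between the source and router."""
    links = set()
    while router in PARENT:
        links.add((PARENT[router], router))
        router = PARENT[router]
    return links


def link_copies(receiver_routers):
    """Return (unicast_copies, multicast_copies) summed over every link.

    Unicast: each receiver gets its own copy on every link of its path.
    Multicast: each link in the union of paths carries exactly one copy,
    because routers replicate only where paths diverge.
    """
    unicast = sum(len(path_from_source(r)) for r in receiver_routers)
    tree = set().union(*(path_from_source(r) for r in receiver_routers))
    return unicast, len(tree)


def source_egress_mbps(receivers, stream_mbps, multicast):
    """Bandwidth leaving the source: one stream for multicast, N streams for unicast."""
    return stream_mbps if multicast else receivers * stream_mbps


if __name__ == "__main__":
    # Hypothetical load: 10 receivers behind each edge router, one 8 Mb/s stream.
    receivers = [e for e in ("edge1", "edge2", "edge3", "edge4") for _ in range(10)]
    print(link_copies(receivers))                                  # (120, 7)
    print(source_egress_mbps(len(receivers), 8, multicast=False))  # 320
    print(source_egress_mbps(len(receivers), 8, multicast=True))   # 8
```

With 40 receivers, unicast puts 120 copies on the seven links of this tree, while multicast puts exactly one copy on each of them; the gap widens linearly as receivers are added behind the same edge routers.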

For high-definition streaming CDNs and HFT market-data fabrics, multicast, powered by a robust routing protocol like Protocol Independent Multicast (PIM), is the only viable solution.

PIM Overview: The Engine of IP Multicast

PIM is the most popular and widely deployed multicast routing protocol. Unlike DVMRP, which runs its own distance-vector routing protocol to build reverse paths, PIM is protocol-independent: it does not compute a separate unicast topology but instead uses the existing unicast routing table (populated by OSPF, IS-IS, BGP, or static routes) for its RPF checks and forwarding decisions. This is a crucial feature for modern network design.
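Because PIM relies on the unicast routing table, finding the interface toward a source (or RP) is just an ordinary longest-prefix-match lookup. A minimal Python sketch, assuming a hypothetical RIB stored as a prefix-to-interface dictionary (real routers use a trie or a hardware FIB rather than a linear scan):

```python
import ipaddress
from typing import Dict, Optional

# Hypothetical unicast RIB, as OSPF, IS-IS, or BGP might populate it:
# destination prefix -> outgoing interface.
UNICAST_RIB: Dict[str, str] = {
    "0.0.0.0/0": "eth0",
    "10.0.0.0/8": "eth1",
    "10.1.0.0/16": "eth2",
    "192.0.2.0/24": "eth3",
}


def rpf_interface(address: str, rib: Dict[str, str]) -> Optional[str]:
    """Return the interface the unicast RIB uses to reach `address`.

    PIM computes no routes of its own: the RPF interface toward a source
    (or toward the RP) is simply the longest-prefix-match result.
    """
    addr = ipaddress.ip_address(address)
    best_len, best_iface = -1, None
    for prefix, iface in rib.items():
        net = ipaddress.ip_network(prefix)
        if addr in net and net.prefixlen > best_len:
            best_len, best_iface = net.prefixlen, iface
    return best_iface


if __name__ == "__main__":
    for src in ("10.1.2.3", "10.9.9.9", "192.0.2.10", "198.51.100.1"):
        print(src, "->", rpf_interface(src, UNICAST_RIB))
```

If an IGP change moves the best route for a source to another interface, the RPF interface moves with it, which is why unicast stability matters so much to multicast.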

Core PIM Concepts

| Term | Meaning & Architectural Significance |

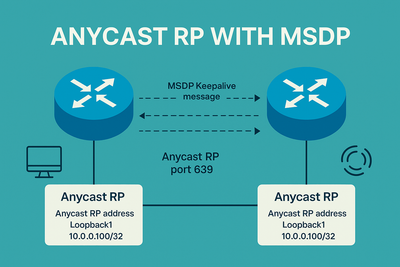

| RP (Rendezvous Point) | A strategically placed router that acts as a shared root for a multicast tree in Sparse Mode. All sources register with the RP, and all receivers initially send their join requests to it. Architectural Impact: RP placement is critical. A poorly placed RP can create suboptimal traffic paths, increasing latency. Anycast RP is often used for redundancy and load sharing. |

| DR (Designated Router) | On a multi-access network segment (like Ethernet), one router is elected as the DR. It is responsible for sending PIM Join/Prune messages upstream on behalf of the receivers on that segment. |

| Join/Prune | PIM messages used by routers to dynamically build and tear down multicast distribution trees. Joins are sent upstream toward the source or RP to request traffic, while Prunes are sent to stop unwanted traffic flow. |

| RPF (Reverse Path Forwarding) | The fundamental loop-prevention mechanism in multicast. A router only forwards a multicast packet if it is received on the interface that provides the shortest unicast path back to the source. Architectural Impact: A stable, congruent unicast topology is a prerequisite for a stable multicast network. Asymmetric routes can cause RPF failures and black-hole traffic. |

| (S, G) State | A multicast routing table entry for a specific Source (S) and Group (G). This represents a source-specific tree, also known as a Shortest Path Tree (SPT). |

| (*, G) State | A multicast routing table entry for any Source (*) and a specific Group (G). This represents a shared tree, rooted at the RP. |
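The concepts in the table above fit together in a few lines. The sketch below uses hypothetical names (`MrouteEntry`, `forward`, `elect_dr`): it prefers (S, G) state over (*, G) state, applies the RPF check before replicating, and elects a DR by highest priority and then highest IP address. It deliberately omits real PIM-SM details such as (S,G,rpt) prune state and the IP-only election fallback used when a neighbor does not advertise a DR priority.

```python
import ipaddress
from dataclasses import dataclass, field
from typing import Dict, Set, Tuple


@dataclass
class MrouteEntry:
    incoming: str  # RPF interface: toward S for (S,G), toward the RP for (*,G)
    outgoing: Set[str] = field(default_factory=set)  # interfaces with downstream Joins


# Hypothetical multicast routing table; ("*", G) keys model shared-tree state.
MrouteTable = Dict[Tuple[str, str], MrouteEntry]


def forward(table: MrouteTable, source: str, group: str, arrived_on: str) -> Set[str]:
    """Return the interfaces to replicate a packet onto (empty set means drop)."""
    # Prefer source-specific (S,G) state; fall back to shared-tree (*,G) state.
    entry = table.get((source, group)) or table.get(("*", group))
    if entry is None:
        return set()  # no Join state: no downstream receivers for this group
    if arrived_on != entry.incoming:
        return set()  # RPF check failed: drop to prevent loops
    return entry.outgoing - {arrived_on}


def elect_dr(priorities: Dict[str, int]) -> str:
    """Elect the DR on a segment: highest DR priority wins, ties go to the highest IP."""
    return max(priorities, key=lambda ip: (priorities[ip], ipaddress.ip_address(ip)))
```

For example, a packet from a source with (S, G) state that arrives on the shared-tree interface instead of the SPT interface fails the RPF check and is dropped, even though (*, G) state exists for the same group.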

Architect's Guide to PIM Modes

Choosing the right PIM mode is one of the most critical architectural decisions for a high-performance multicast network.

PIM Dense Mode (PIM-DM)

- How it Works: PIM-DM employs a "flood and prune" mechanism. It initially floods multicast traffic to every router in the network. Routers without downstream receivers then send Prune messages upstream to stop the flow. This process repeats every 3 minutes, which can cause periodic bursts of unwanted traffic.

- Use Case: Suited for small, dense networks where active receivers are present on nearly every subnet. It's rarely used today but might be found in isolated lab environments or very small, dedicated clusters.

- Latency & Scalability: Offers very low initial latency because traffic is flooded immediately. However, it scales poorly and is unsuitable for large-scale streaming or HFT networks due to the high overhead and periodic flooding.

- Architectural Verdict: Avoid for any modern streaming or HFT deployment. The periodic traffic floods introduce unacceptable latency spikes and waste significant bandwidth.
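The flood-and-prune behavior can be sketched as a recursive walk over the flooding tree: every link carries traffic during the flood, and a router prunes itself when neither it nor anything below it has receivers. The topology and receiver set are hypothetical, and the sketch models only the result of one prune round, not timers or the State Refresh extension.

```python
from typing import Dict, List, Set, Tuple

Link = Tuple[str, str]

# Hypothetical flooding tree rooted at the source's first-hop router:
# router -> downstream PIM neighbors.
DOWNSTREAM: Dict[str, List[str]] = {
    "R1": ["R2", "R3"],
    "R2": ["R4", "R5"],
    "R3": ["R6"],
    "R4": [],
    "R5": [],
    "R6": [],
}


def flood(root: str) -> Set[Link]:
    """Flood phase: traffic is pushed onto every downstream link."""
    links: Set[Link] = set()
    stack = [root]
    while stack:
        router = stack.pop()
        for child in DOWNSTREAM[router]:
            links.add((router, child))
            stack.append(child)
    return links


def after_prune(root: str, has_receivers: Set[str]) -> Set[Link]:
    """Prune phase: return the links still forwarding once Prunes propagate upstream.

    A router prunes itself off its upstream link when neither it nor any
    router below it has receivers.
    """
    kept: Set[Link] = set()

    def wants_traffic(router: str) -> bool:
        # Visit every child (the list comprehension does not short-circuit).
        interested = [c for c in DOWNSTREAM[router] if wants_traffic(c)]
        kept.update((router, c) for c in interested)
        return router in has_receivers or bool(interested)

    wants_traffic(root)
    return kept


if __name__ == "__main__":
    receivers = {"R5"}  # hypothetical: only R5 has a local receiver
    print(len(flood("R1")), "links carry traffic during the flood")
    print(sorted(after_prune("R1", receivers)), "after pruning")
    # When prune state expires (every 3 minutes per the text above), the flood repeats.
```

With a single receiver behind R5, five links carry traffic during each flood but only two are needed afterwards; the other three receive a burst of unwanted traffic every cycle.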

PIM Sparse Mode (PIM-SM)

- How it Works: PIM-SM uses an explicit-join or "pull" model, where traffic is only sent to network segments where receivers have explicitly requested it. It relies on a Rendezvous Point (RP) to act as a meeting point for sources and receivers.

- A receiver joins group G (typically via IGMP), and its DR sends a (*,G) PIM Join toward the RP, building the shared tree.

- A source starts sending, and its DR encapsulates the traffic in unicast Register messages to the RP. The RP then joins a source tree toward the source and, once native traffic arrives along it, sends a Register-Stop to the DR.

- Once the receiver's last-hop router starts receiving traffic, it can switch to the more optimal Shortest Path Tree (SPT) by sending an (S,G) Join directly toward the source, bypassing the RP for subsequent traffic.

- Use Case: The de facto standard for large-scale, general-purpose multicast. Excellent for video streaming applications where sources and receivers are geographically dispersed and group membership is dynamic.

- Latency & Scalability: The initial latency can be suboptimal because traffic detours through the RP. However, the switch to the SPT minimizes this for long-lived flows. It scales well to thousands of sources and groups.

- Architectural Verdict: Excellent for video streaming. For HFT, it's viable but requires careful RP placement and a fast SPT switchover (`ip pim spt-threshold infinity`, which keeps receivers on the shared tree indefinitely, should NOT be used). For high availability, an Anycast RP design is recommended.
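The receiver-side half of the PIM-SM sequence can be sketched as a tiny state machine. `LastHopRouter` and its methods are hypothetical; the threshold semantics (0 switches on the first packet, infinity never switches) mirror the Cisco IOS `ip pim spt-threshold` setting mentioned above. As a simplification, the sketch sends the (S,G,rpt) Prune toward the RP immediately after the (S,G) Join, whereas real PIM-SM waits until traffic actually arrives on the SPT.

```python
import math
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class LastHopRouter:
    """Hypothetical, simplified PIM-SM receiver-side DR for one group.

    spt_threshold_kbps: 0 switches to the SPT on the first packet;
    math.inf never switches (the equivalent of "spt-threshold infinity").
    """

    group: str
    spt_threshold_kbps: float = 0.0
    sent: List[str] = field(default_factory=list)  # PIM messages, in order
    on_spt: Set[str] = field(default_factory=set)  # sources already switched

    def receiver_joined(self) -> None:
        # A local receiver joins G: build the shared tree toward the RP.
        self.sent.append(f"Join (*,{self.group}) -> RP")

    def shared_tree_data(self, source: str, rate_kbps: float) -> None:
        # Traffic from `source` arrives via the RP; decide whether to switch.
        if source in self.on_spt:
            return
        if self.spt_threshold_kbps == 0 or rate_kbps > self.spt_threshold_kbps:
            self.on_spt.add(source)
            # Join the source tree directly...
            self.sent.append(f"Join ({source},{self.group}) -> {source}")
            # ...then stop receiving this source via the RP (simplified timing).
            self.sent.append(f"Prune ({source},{self.group},rpt) -> RP")


if __name__ == "__main__":
    fast = LastHopRouter("239.1.1.1")
    fast.receiver_joined()
    fast.shared_tree_data("10.1.1.1", rate_kbps=500)
    print(fast.sent)

    stuck = LastHopRouter("239.1.1.1", spt_threshold_kbps=math.inf)
    stuck.receiver_joined()
    stuck.shared_tree_data("10.1.1.1", rate_kbps=500)
    print(stuck.sent)  # only the (*,G) Join: traffic keeps detouring through the RP
```

With a zero threshold the router leaves the shared tree as soon as the source appears; with an infinite threshold every packet keeps taking the path through the RP, which is exactly the extra latency an HFT fabric wants to avoid.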